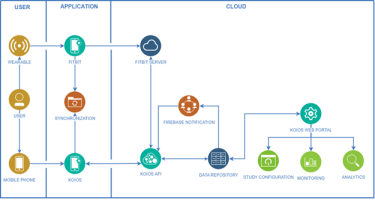

Multimodal Speech Emotion Recognition: From October 2022 to August 2023, we collected speech and wearable data from 150 college students using Fitbit devices and a mobile app. In size and content, this dataset is comparable to, and in some respects exceeds, the most widely used datasets in this field. We have used these data for speech emotion recognition, but they can also support a range of downstream tasks in healthcare and natural language processing (NLP).

Multimodal Detection of Depression Using Commercial Wearables: This project uses recent public datasets of Fitbit data labeled with mental health information. We aim to train deep learning models that identify depressive symptoms from these data and to combine them with models for other sensor modalities, such as voice samples, so that depression can be detected early using only commercial devices.

Depression Detection: Clinical interviews are widely regarded as the gold standard for detecting depression. Traditional methods often rely heavily on specific question-and-answer pairs, which is impractical and does not generalize well to real-world clinical settings. In this project, we propose using positional encoding and latent-space regularization to model the interactions across the whole interview. By jointly analyzing the subjects' audio responses and the text of the interviewer's prompts, our model captures how the context of the conversation evolves. This approach lets the model draw on insights from the entire interview and prevents over-reliance on isolated or late-stage cues.
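The summary does not say which positional encoding the model uses. As an illustration only, the sketch below assumes the sinusoidal encoding from the original Transformer paper, indexed by interview turn, and adds it to per-turn embeddings so that the same answer given early or late in the interview maps to different vectors. The helper names, the per-turn embedding layout, and the choice of sinusoidal encoding are all assumptions; the latent-space regularization is not shown.

```python
import math
from typing import List


def sinusoidal_encoding(position: int, dim: int) -> List[float]:
    """Sinusoidal positional encoding (as in the original Transformer) for one position.

    Even indices hold sin terms and odd indices hold cos terms; each sin/cos
    pair uses a geometrically smaller frequency than the previous pair.
    """
    if dim % 2 != 0:
        raise ValueError("dim must be even")
    enc = [0.0] * dim
    for i in range(0, dim, 2):
        # Angle for the (i // 2)-th sin/cos pair.
        angle = position / (10000 ** (i / dim))
        enc[i] = math.sin(angle)
        enc[i + 1] = math.cos(angle)
    return enc


def add_turn_positions(turn_embeddings: List[List[float]]) -> List[List[float]]:
    """Hypothetical helper: add a turn-index encoding to each turn embedding.

    turn_embeddings[k] is assumed to be a fused embedding of interview turn k
    (interviewer prompt text plus the subject's audio response).
    """
    out = []
    for k, emb in enumerate(turn_embeddings):
        pe = sinusoidal_encoding(k, len(emb))
        out.append([e + p for e, p in zip(emb, pe)])
    return out
```

Because the encoding depends only on the turn index, it can be precomputed once for the longest expected interview and reused across subjects.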

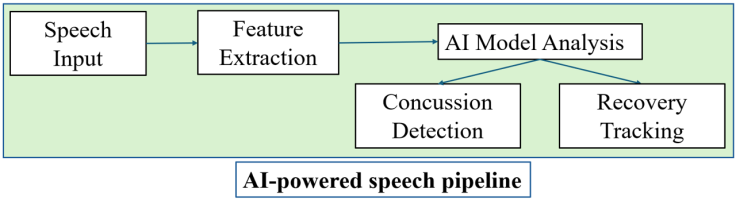

Speech-Based Digital Health Tool for Concussion Detection and Recovery Tracking Using Deep Learning: This project develops an AI-powered speech analysis tool that detects concussions and tracks recovery. The system analyzes log mel-spectrograms of speech to identify speech-based biomarkers, enabling objective concussion detection and monitoring of recovery over time. Using CNNs and Vision Transformers, the tool aims to improve diagnostic accuracy beyond traditional self-reports and cognitive tests, making it valuable for neurology, sports medicine, and digital health applications.
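The summary names log mel-spectrograms as the model input but not the settings used to compute them. The sketch below shows the standard computation (framing, Hann window, power spectrum, triangular mel filterbank, log) in plain Python so it runs without dependencies. The frame size, hop, number of mel bands, the HTK-style mel formula, and the function names are illustrative assumptions, not the project's configuration; in practice a library such as librosa or torchaudio would do this with an FFT.

```python
import cmath
import math
from typing import List


def hz_to_mel(f: float) -> float:
    # HTK-style mel scale (assumed; other mel formulas exist).
    return 2595.0 * math.log10(1.0 + f / 700.0)


def mel_to_hz(m: float) -> float:
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(n_mels: int, n_fft: int, sr: int) -> List[List[float]]:
    """Triangular filters mapping n_fft // 2 + 1 spectrum bins to n_mels bands."""
    n_bins = n_fft // 2 + 1
    mel_max = hz_to_mel(sr / 2)
    # n_mels + 2 points equally spaced on the mel scale give each filter's
    # left edge, centre and right edge (converted back to Hz).
    hz_points = [mel_to_hz(mel_max * k / (n_mels + 1)) for k in range(n_mels + 2)]
    bin_freqs = [b * sr / n_fft for b in range(n_bins)]
    fb = []
    for m in range(1, n_mels + 1):
        left, centre, right = hz_points[m - 1], hz_points[m], hz_points[m + 1]
        row = []
        for f in bin_freqs:
            if left <= f <= centre:
                w = (f - left) / (centre - left)  # rising slope
            elif centre < f <= right:
                w = (right - f) / (right - centre)  # falling slope
            else:
                w = 0.0
            row.append(w)
        fb.append(row)
    return fb


def power_spectrum(frame: List[float]) -> List[float]:
    """Naive O(N^2) DFT power spectrum for bins 0..N/2; real code would use an FFT."""
    n = len(frame)
    out = []
    for k in range(n // 2 + 1):
        s = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(frame))
        out.append(abs(s) ** 2)
    return out


def log_mel_spectrogram(signal: List[float], sr: int, n_fft: int = 256,
                        hop: int = 128, n_mels: int = 20,
                        eps: float = 1e-10) -> List[List[float]]:
    """Return one list of n_mels log-mel energies per full frame of the signal."""
    # Periodic Hann window.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * t / n_fft) for t in range(n_fft)]
    fb = mel_filterbank(n_mels, n_fft, sr)
    frames = []
    for start in range(0, len(signal) - n_fft + 1, hop):
        frame = [signal[start + t] * window[t] for t in range(n_fft)]
        power = power_spectrum(frame)
        mel = [sum(w * p for w, p in zip(row, power)) for row in fb]
        # eps avoids log(0) on silent frames.
        frames.append([math.log(e + eps) for e in mel])
    return frames
```

The resulting frames × n_mels matrix is typically treated as a single-channel image, which is what lets both CNNs and Vision Transformers consume it directly.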

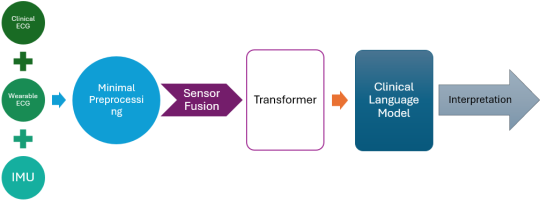

Multimodal AI for Cardiac Insights: Cardiovascular diseases remain a leading global health concern, and accurate, real-time monitoring is needed to improve early detection and patient outcomes. This project uses transformers and large language models (LLMs) to interpret electrocardiogram (ECG) and inertial measurement unit (IMU) data, combining clinical and real-world datasets for robust analysis. Beyond providing diagnostic insights, the system answers user queries, making it an interactive tool for real-time cardiac monitoring and personalized health guidance.